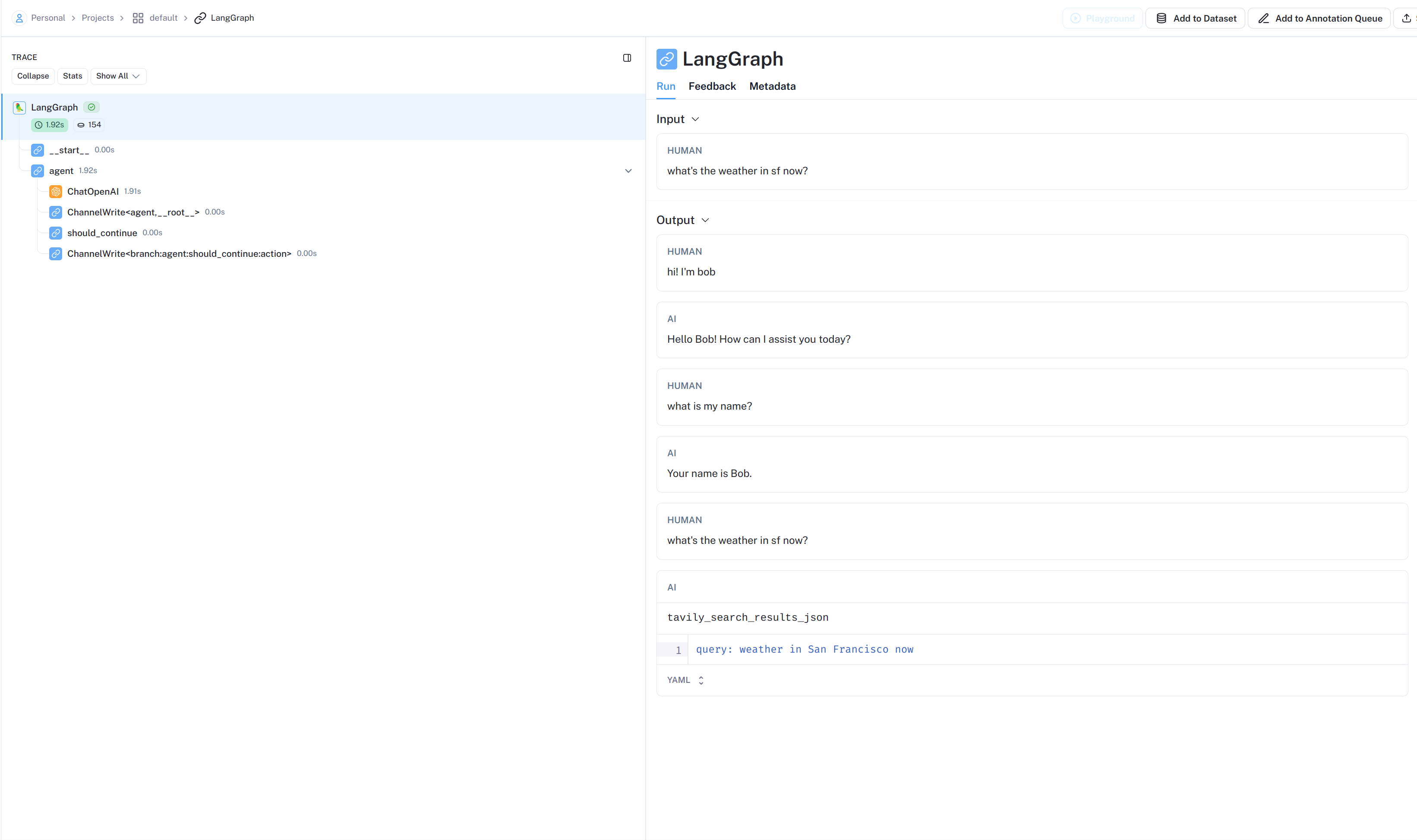

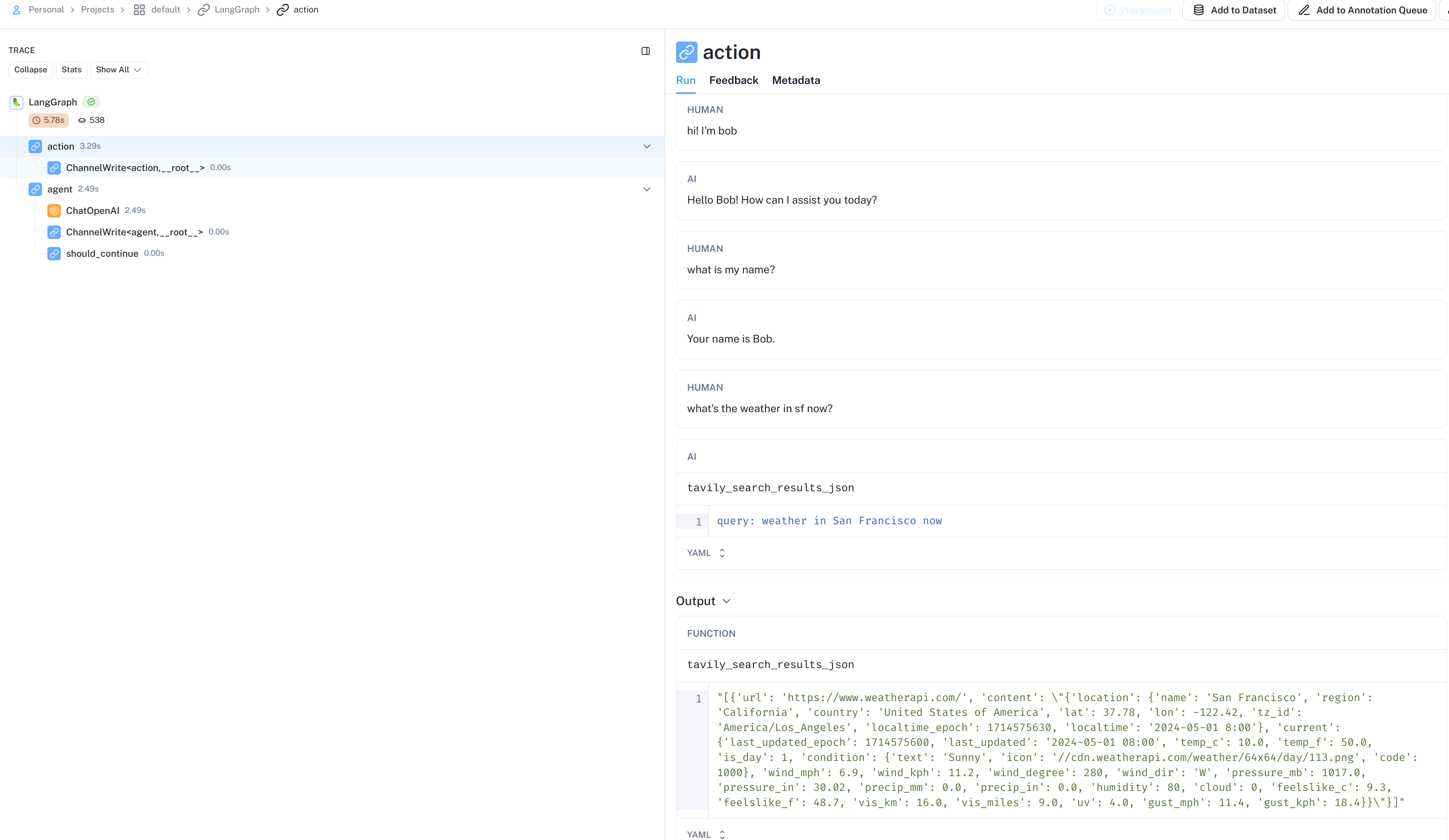

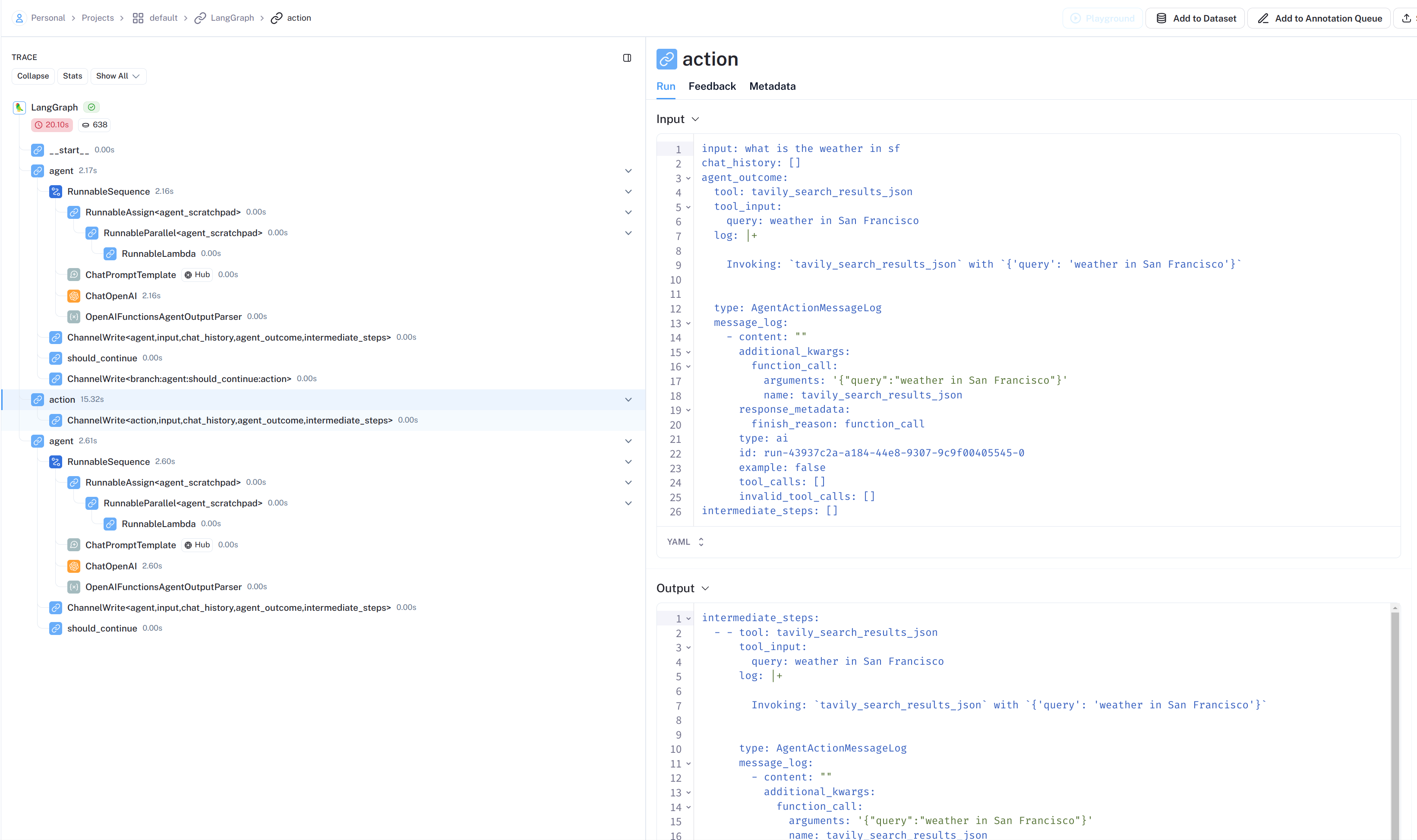

| {'agent_outcome': AgentActionMessageLog(tool='tavily_search_results_json', tool_input={'query': 'weather in San Francisco'}, log="\nInvoking: `tavily_search_results_json` with `{'query': 'weather in San Francisco'}`\n\n\n", message_log=[AIMessage(content='', additional_kwargs={'function_call': {'arguments': '{"query":"weather in San Francisco"}', 'name': 'tavily_search_results_json'}}, response_metadata={'finish_reason': 'function_call'}, id='run-43937c2a-a184-44e8-9307-9c9f00405545-0')])}

----

[y/n] continue with: tool='tavily_search_results_json' tool_input={'query': 'weather in San Francisco'} log="\nInvoking: `tavily_search_results_json` with `{'query': 'weather in San Francisco'}`\n\n\n" message_log=[AIMessage(content='', additional_kwargs={'function_call': {'arguments': '{"query":"weather in San Francisco"}', 'name': 'tavily_search_results_json'}}, response_metadata={'finish_reason': 'function_call'}, id='run-43937c2a-a184-44e8-9307-9c9f00405545-0')]?y (this `y` is typed by the human to authorize the tool call)

{'intermediate_steps': [(AgentActionMessageLog(tool='tavily_search_results_json', tool_input={'query': 'weather in San Francisco'}, log="\nInvoking: `tavily_search_results_json` with `{'query': 'weather in San Francisco'}`\n\n\n", message_log=[AIMessage(content='', additional_kwargs={'function_call': {'arguments': '{"query":"weather in San Francisco"}', 'name': 'tavily_search_results_json'}}, response_metadata={'finish_reason': 'function_call'}, id='run-43937c2a-a184-44e8-9307-9c9f00405545-0')]), '[{\'url\': \'https://www.weatherapi.com/\', \'content\': "{\'location\': {\'name\': \'San Francisco\', \'region\': \'California\', \'country\': \'United States of America\', \'lat\': 37.78, \'lon\': -122.42, \'tz_id\': \'America/Los_Angeles\', \'localtime_epoch\': 1714575155, \'localtime\': \'2024-05-01 7:52\'}, \'current\': {\'last_updated_epoch\': 1714574700, \'last_updated\': \'2024-05-01 07:45\', \'temp_c\': 10.0, \'temp_f\': 50.0, \'is_day\': 1, \'condition\': {\'text\': \'Sunny\', \'icon\': \'//cdn.weatherapi.com/weather/64x64/day/113.png\', \'code\': 1000}, \'wind_mph\': 6.9, \'wind_kph\': 11.2, \'wind_degree\': 280, \'wind_dir\': \'W\', \'pressure_mb\': 1017.0, \'pressure_in\': 30.02, \'precip_mm\': 0.0, \'precip_in\': 0.0, \'humidity\': 80, \'cloud\': 0, \'feelslike_c\': 9.6, \'feelslike_f\': 49.4, \'vis_km\': 16.0, \'vis_miles\': 9.0, \'uv\': 4.0, \'gust_mph\': 11.4, \'gust_kph\': 18.4}}"}]')]}

----

{'agent_outcome': AgentFinish(return_values={'output': 'The current weather in San Francisco is sunny with a temperature of 10°C (50°F). The wind is blowing at 6.9 mph from the west. The humidity is at 80%, and there is no precipitation. The visibility is 16.0 km (9.0 miles), and the UV index is 4.0.'}, log='The current weather in San Francisco is sunny with a temperature of 10°C (50°F). The wind is blowing at 6.9 mph from the west. The humidity is at 80%, and there is no precipitation. The visibility is 16.0 km (9.0 miles), and the UV index is 4.0.')}

|
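
The log above shows the agent proposing a tool call, a human confirming it with `y`, and the tool result being appended to `intermediate_steps`. A minimal sketch of such an approval gate follows, in plain Python rather than the actual graph code. The names `execute_with_approval` and `fake_search` and the dict-shaped action are hypothetical stand-ins, not part of LangChain or LangGraph.

```python
from typing import Any, Callable, Dict


def fake_search(tool_input: Dict[str, Any]) -> str:
    """Hypothetical stand-in for a real search tool."""
    return f"results for {tool_input['query']}"


def execute_with_approval(
    state: Dict[str, Any],
    run_tool: Callable[[Dict[str, Any]], str] = fake_search,
    ask: Callable[[str], str] = input,
) -> Dict[str, Any]:
    """Run the pending tool call only after a human answers 'y'."""
    # The action the agent proposed in the previous step (assumed dict shape).
    action = state["agent_outcome"]

    # Same prompt shape as in the log: the human types y or n.
    answer = ask(f"[y/n] continue with: {action}?")
    if answer.strip().lower() != "y":
        raise ValueError("tool call rejected by the human reviewer")

    # Approved: run the tool and record the (action, observation) pair.
    observation = run_tool(action["tool_input"])
    steps = list(state.get("intermediate_steps", []))
    steps.append((action, str(observation)))
    return {**state, "intermediate_steps": steps}
```

Passing `ask` as a parameter keeps the gate testable: in an interactive session it defaults to `input`, while a script can supply a function that returns `"y"` or `"n"` directly.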