LangChain (Part 7): Callback

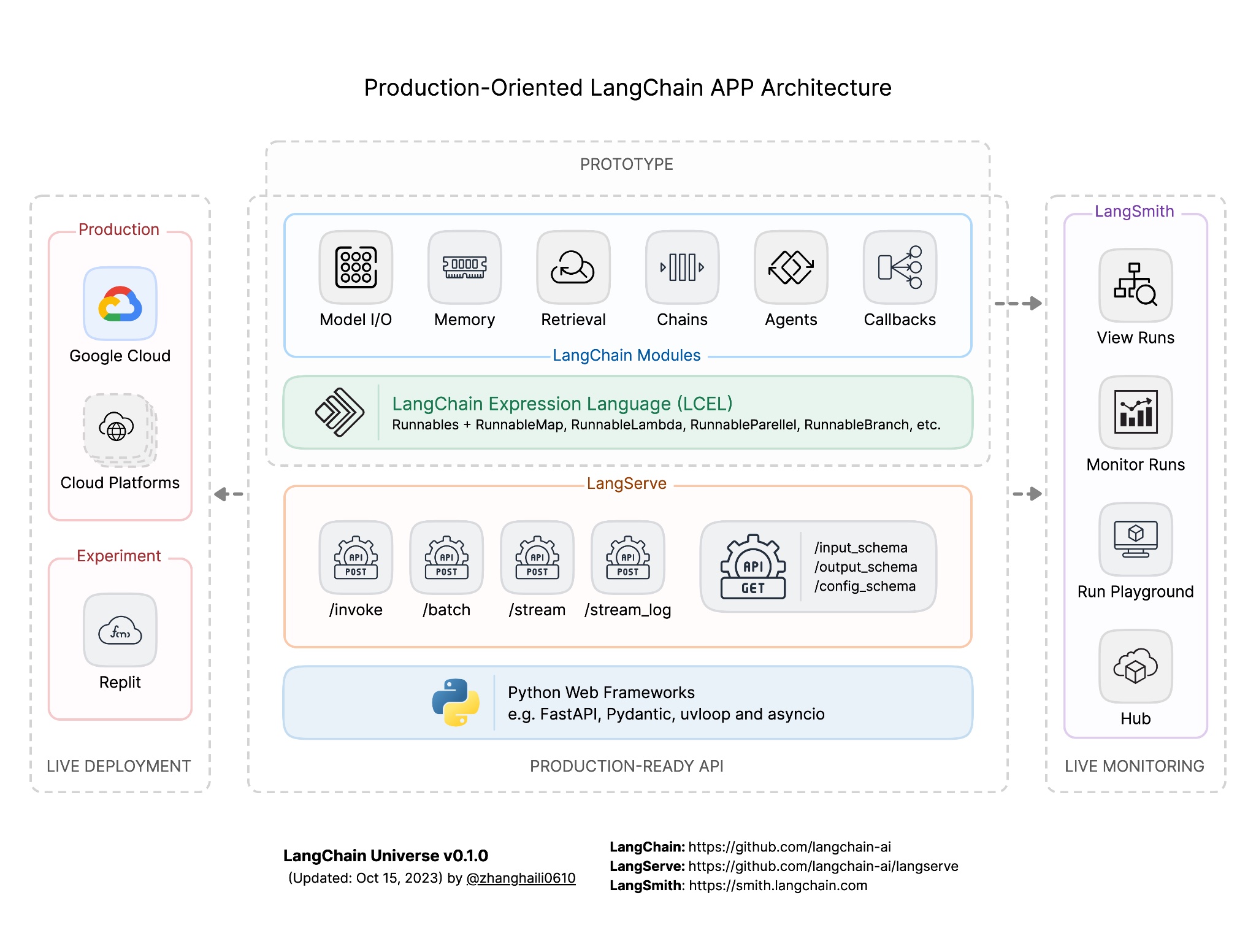

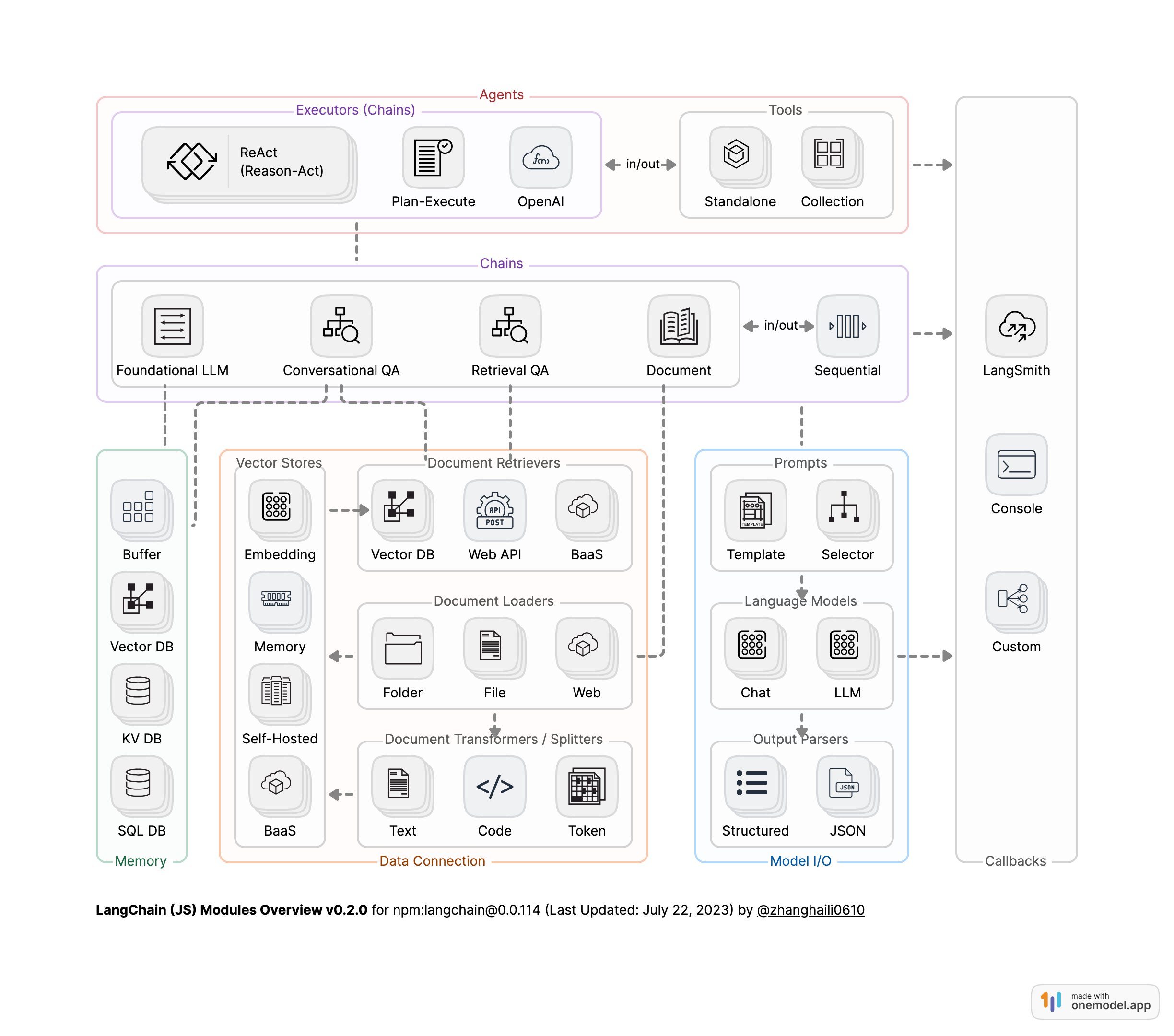

LangChain module architecture diagram [1]

1. The Indispensable Callback System

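The callback system lets us hook into every stage of an LLM application, which is very useful for logging, monitoring, streaming, and similar tasks. LangChain provides a number of built-in callback handlers, which can be found in the langchain/callbacks module.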

1.1 The most basic callback

StdOutCallbackHandler is the most basic handler: it logs all events to stdout. When the verbose flag on an object is set to true, StdOutCallbackHandler is invoked even if it was not passed in explicitly.

The callbacks argument is available in two different places on most objects throughout the API (Models, Chains, Agents, Tools, and so on):

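- Constructor callbacks: passed to the object's constructor. They are used for every call made on that object but are scoped to that object alone. For example, a handler passed to a chain's constructor is not used by the model inside that chain.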

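- Request callbacks: bound when a request is issued through an instance method such as invoke() / ainvoke() / batch() / abatch(), and passed via the config parameter. They apply only to that request and to all of its sub-requests.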


1.2 Async callbacks

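When using the async API, it is recommended to use AsyncCallbackHandler to avoid blocking the event loop. A synchronous CallbackHandler still works when an llm/chain/tool/agent is run with async methods. However, LangChain then calls it from a thread pool (via run_in_executor), which can cause problems if your CallbackHandler is not thread-safe.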

1 | |

1 | |

1.3 Multiple custom callback handlers (writing to a file)


For more on callbacks, see: https://python.langchain.com/docs/modules/callbacks/

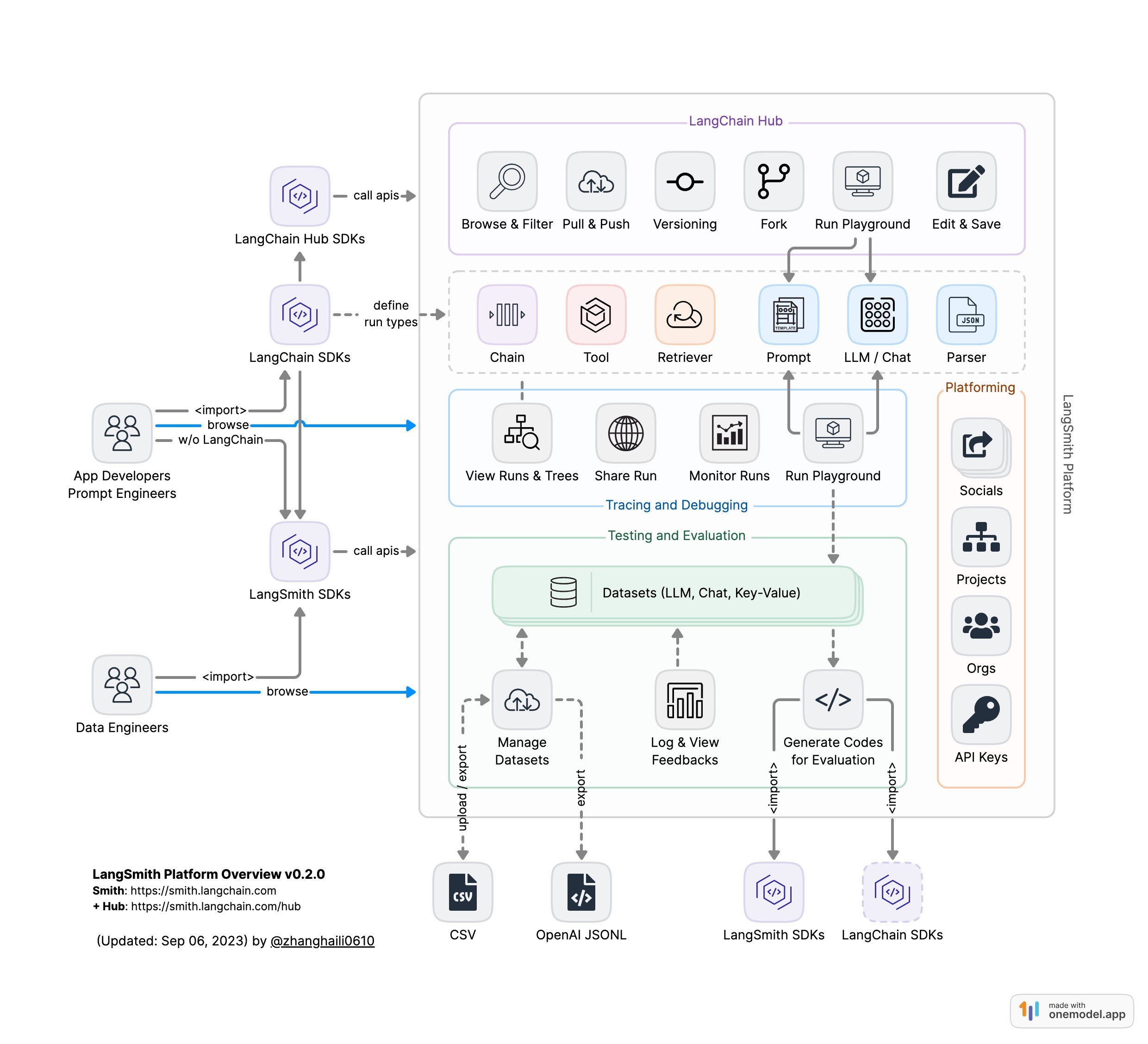

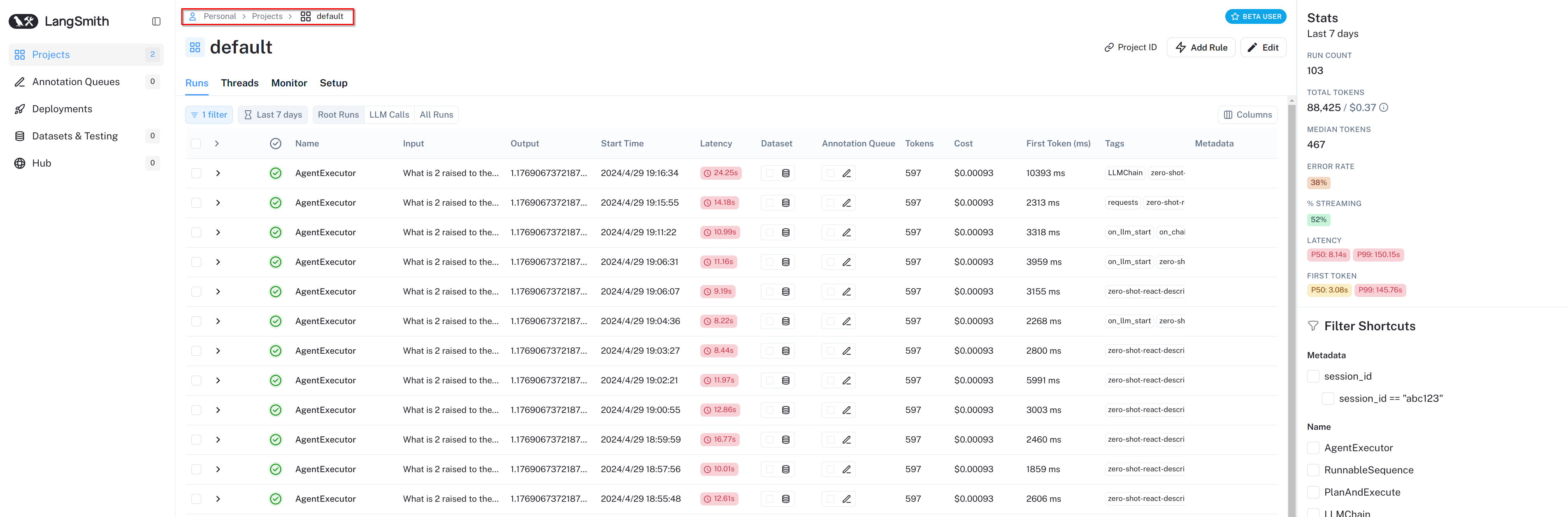

2. LangSmith

LangSmith is a unified platform from LangChain (the company) for debugging, testing, evaluating, and monitoring large language model (LLM) applications.

LangSmith platform [2]

First, apply for an API key on the LangSmith website; new accounts come with some free quota. Then connect to LangSmith through environment variables.
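Below is a minimal sketch of the environment variables, set from Python for illustration. Exporting them in the shell works the same way. `LANGCHAIN_TRACING_V2`, `LANGCHAIN_ENDPOINT`, `LANGCHAIN_API_KEY`, and `LANGCHAIN_PROJECT` are the names read by LangChain's LangSmith integration. Newer SDK releases also accept `LANGSMITH_`-prefixed equivalents, so check the docs for your version. The key is a placeholder, and `setdefault` lets a real key already exported in the shell take precedence.

```python
import os

# Turn on LangSmith tracing for every LangChain run in this process.
os.environ["LANGCHAIN_TRACING_V2"] = "true"
os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"

# Placeholder: keep a key that is already set, rather than hardcoding a real one.
os.environ.setdefault("LANGCHAIN_API_KEY", "<your-langsmith-api-key>")

# Optional: traces go to the project named "default" when this is unset.
os.environ.setdefault("LANGCHAIN_PROJECT", "default")
```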


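After that, run your LangChain code as usual and the corresponding traces will appear in LangSmith. Unless configured otherwise, they go to the project named default.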

LangSmith core features

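Debugging: LangSmith shows the model inputs and outputs at every step of a chain of events. This makes it easy to experiment with new chains and prompts and to track down the root cause of problems such as unexpected results, errors, or latency. You can also inspect latency and token usage to pinpoint performance issues in individual calls.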

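Testing: LangSmith can track data samples or let you upload custom datasets. You can then run chains and prompts against a dataset and either inspect the inputs and outputs manually or test them automatically. Many teams find that manual inspection helps them build an intuition for LLM interactions, which leads to better ideas for optimization.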

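Evaluation: LangSmith integrates with open-source evaluation modules and supports both rule-based evaluation and LLM-based evaluation. LLM-assisted evaluation has the potential to reduce evaluation costs substantially.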

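Monitoring: LangSmith can actively track performance metrics, chain performance, issues that need debugging, and the user interaction experience, so the product can be improved continuously.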

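Unified platform: LangSmith brings all of the above together, so teams do not have to assemble a patchwork of tools and can concentrate on building the core application.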

For more features, see the official documentation: https://docs.smith.langchain.com/

3. Langfuse

Langfuse is an open-source LLM engineering platform that helps teams collaboratively debug, analyze, and iterate on their LLM applications.

To use Langfuse, you can either sign up for the officially hosted platform or self-host it with Docker Compose.


If startup fails with errors, try adjusting docker-compose.yml.


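Once startup completes, open http://localhost:3000 in a browser, register an account, and log in. Then create a project, create an API key under that project's Settings, and copy the corresponding code.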
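For a self-hosted instance, the copied configuration boils down to three settings. This sketch assumes the Langfuse Python SDK, which reads `LANGFUSE_PUBLIC_KEY`, `LANGFUSE_SECRET_KEY`, and `LANGFUSE_HOST`. The key values are placeholders for the ones generated under Settings. The SDK's LangChain `CallbackHandler` picks these up; it is imported from `langfuse.callback` in v2 and from `langfuse.langchain` in v3. It is then passed like any other request callback, e.g. `chain.invoke(inputs, config={"callbacks": [handler]})`.

```python
import os

# Placeholders: replace with the keys created under the project's Settings.
os.environ.setdefault("LANGFUSE_PUBLIC_KEY", "<your-public-key>")
os.environ.setdefault("LANGFUSE_SECRET_KEY", "<your-secret-key>")

# Point the SDK at the self-hosted instance started above.
os.environ["LANGFUSE_HOST"] = "http://localhost:3000"
```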


Then run the code.

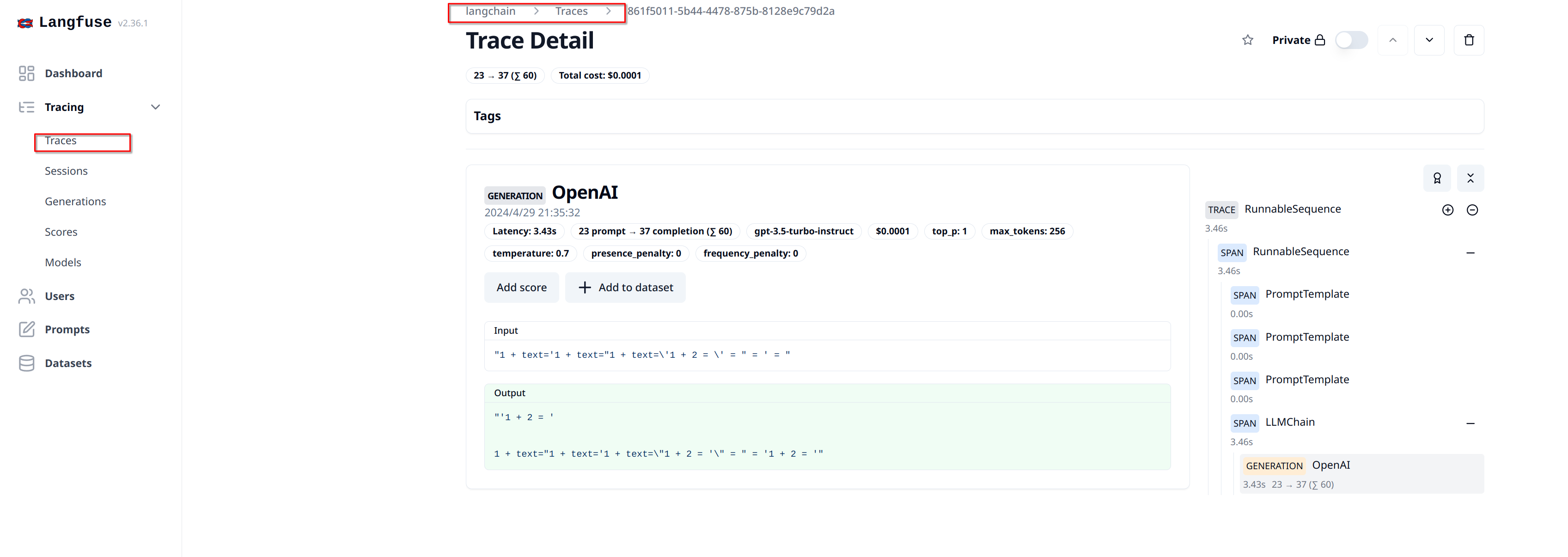

Then go back to http://localhost:3000; the corresponding trace appears under Tracing > Traces.

Langfuse core features

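Development: convenient observability and log-based debugging let developers observe and debug their applications, as well as manage and deploy prompts.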

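Monitoring and analytics: provides insight into application usage, including tracking of cost, latency, and quality, as well as collection of scores and user feedback.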

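Testing: lets you test application behavior and performance before releasing a new version, helping ensure that the new version is stable.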

For more features, see the official documentation: https://langfuse.com/docs

4. LangServe

LangServe is used to deploy a Chain or Runnable as a REST API service.


For more features, see: https://python.langchain.com/docs/langserve/

LangChain LangServer LangSmith[3]